You’ve spent months refining your AI model, optimizing algorithms, and preparing everything for launch. But suddenly, your model’s performance stalls. It’s not learning as efficiently, and the results aren’t as accurate as expected. The issue? Data quality.

You might assume, “We have tons of data. Isn’t that enough?” The truth is, the problem isn’t data volume; it’s having the right data. Data scarcity and bias are often overlooked, yet they are silent killers of AI potential.

Take the case of a major healthcare provider trying to train an AI model for early-stage skin cancer detection. They needed millions of precisely labeled images, particularly of rare cancer variants. However, their dermatology department sees only 50,000 patients annually, making it nearly impossible to gather enough data. Even if they captured images from every patient, it would take decades to build a sufficient dataset.

So, how do they overcome this critical data constraint?

The answer is synthetic data. By generating diverse, scalable, and accurate datasets, synthetic data can ease data scarcity and reduce bias, helping your AI model learn faster and perform better.

[Image: Broken data causing AI results to crumble]

The Real Bottleneck Is Not What You Think It Is

Let’s face it: when most people talk about AI, they focus on compute power, faster algorithms, or the next breakthrough in deep learning architectures. Having worked hands-on with AI implementation, we’ve seen its real challenges up close, and the biggest bottleneck isn’t what most people expect.

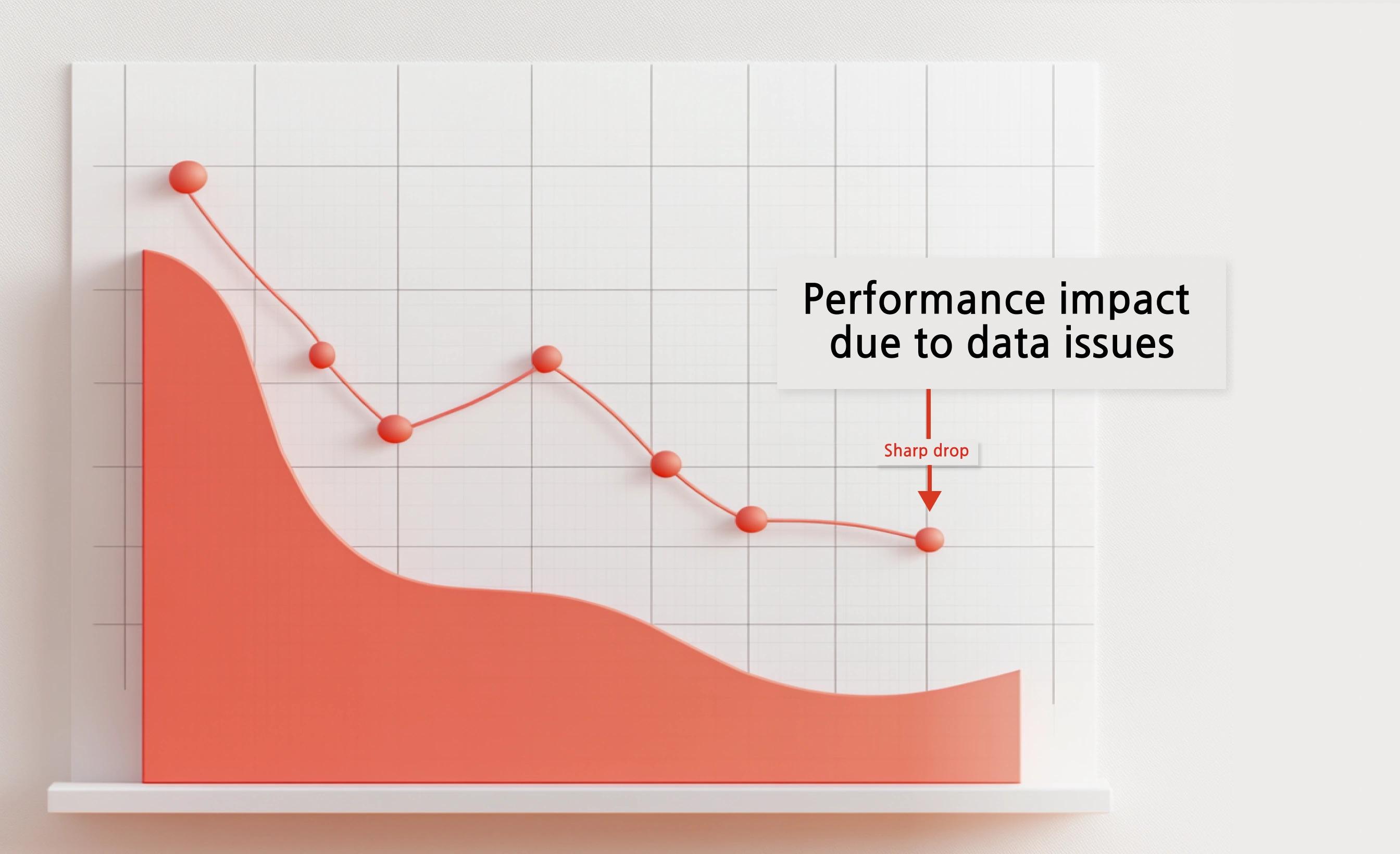

Here's the harsh truth: by widely cited industry estimates, roughly 90% of machine learning projects never make it to deployment, and a staggering 96% of organizations run into data quality problems.

You may be asking, "Isn’t the issue compute power or algorithm design?"

While those are certainly important, the real problem lies in the data itself. Traditional data collection methods simply can’t scale fast enough to meet the demands of modern AI systems.

[Image: Sharp drop in performance when data issues emerge]

Think about training a computer vision model. To train a robust model from scratch, you can need upwards of 100,000 labeled images per class. And that’s just the start.

You need data that’s not only vast but also diverse enough to capture the variations of the object across settings, lighting conditions, and angles. Without that, the model won’t generalize in real-world applications. Tesla’s early Autopilot systems, for example, struggled with edge cases because their training data covered too few rare road conditions.

Now, let’s move to Natural Language Processing (NLP). Transformers like GPT and BERT are trained on billions of tokens to learn context and nuance in language.

A single token represents a word or part of a word. Training a high-performing model isn’t just about collecting millions of sentences; it’s about gathering varied language data across different domains and use cases. OpenAI’s GPT-3, for example, was trained on a mix of sources that included roughly 570GB of filtered Common Crawl text.

Without such a massive and diverse dataset, the model wouldn't be able to understand the subtlety and complexity of human language.
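To make "a word or part of a word" concrete, here is a toy sketch of greedy longest-match-first subword splitting, in the spirit of the WordPiece scheme BERT uses (continuation pieces carry a `##` prefix). The six-entry vocabulary is hypothetical; real tokenizers learn vocabularies of tens of thousands of pieces from data.

```python
def tokenize(word, vocab):
    """Split `word` into the longest vocabulary pieces, left to right.

    Pieces that continue a word are looked up with a '##' prefix.
    Returns ['[UNK]'] if some part of the word cannot be matched.
    """
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return ["[UNK]"]
        tokens.append(piece)
        start = end
    return tokens

# Hypothetical toy vocabulary.
VOCAB = {"un", "##break", "##able", "token", "##ization", "data"}

for w in ["unbreakable", "tokenization", "data"]:
    print(w, "->", tokenize(w, VOCAB))
```

Common words stay whole, while rarer words are built from reusable pieces, which is why token counts run higher than word counts.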

And then there’s rare event detection, which is often the hardest challenge. When an event occurs in fewer than 0.1% of cases, you may need millions of examples in total just to capture enough instances of it. Think about fraud detection in financial transactions.

Banks like JPMorgan Chase rely on machine learning models to detect fraudulent activity in real time, but fraud typically makes up only a tiny fraction of all transactions. Without a rich and varied set of fraud examples, the model’s performance can degrade quickly, leading to missed detections or false positives.
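To see why rarity itself is a trap, here is a back-of-the-envelope sketch on a hypothetical dataset of 100,000 transactions with a 0.1% fraud rate. A "model" that never flags anything still looks excellent on accuracy while catching none of the fraud.

```python
# Hypothetical dataset: 100,000 transactions, 0.1% of them fraudulent.
n_total = 100_000
n_fraud = n_total // 1000  # 100 fraudulent transactions

labels = [1] * n_fraud + [0] * (n_total - n_fraud)  # 1 = fraud, 0 = legitimate

# A useless "model" that never flags anything as fraud.
predictions = [0] * n_total

true_positives = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
correct = sum(1 for y, p in zip(labels, predictions) if y == p)

accuracy = correct / n_total        # share of all predictions that are right
recall = true_positives / n_fraud   # share of actual fraud that gets caught

print(f"accuracy = {accuracy:.1%}, fraud recall = {recall:.0%}")
# prints: accuracy = 99.9%, fraud recall = 0%
```

This is why rare-event models are judged on precision and recall for the rare class rather than overall accuracy, and why having enough fraud examples matters so much.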

But here's the million-dollar question: How do we bridge this gap when real-world data collection is constrained by physics, time, and resources?

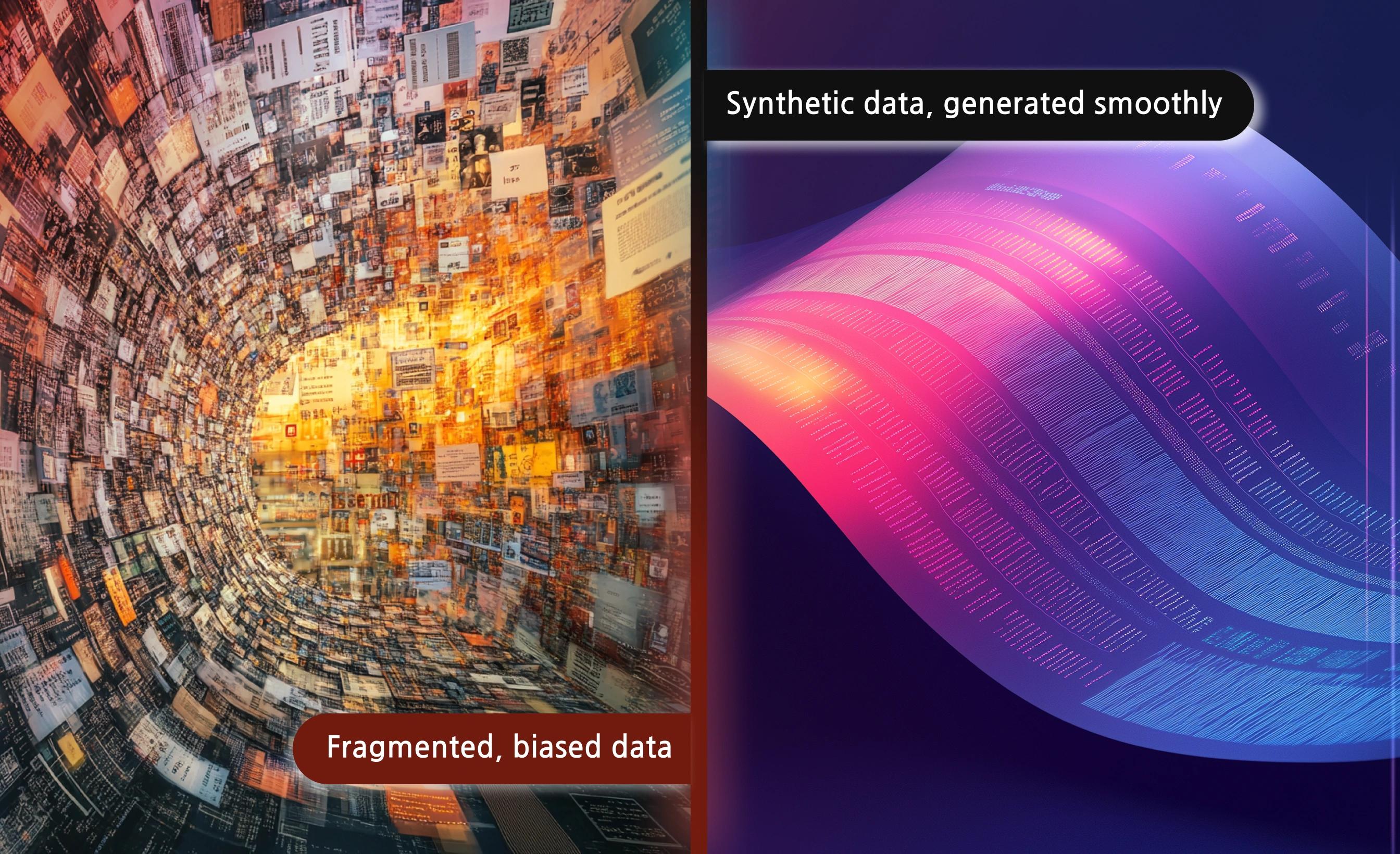

AI's Missing Link – How Synthetic Data Can Solve Your Data Bottleneck

[Image: Fragmented, biased data vs. synthetic data being generated smoothly]

We're not talking about simple data augmentation here. Modern synthetic data generation uses generative adversarial networks (GANs) and diffusion models to create highly realistic data designed to match the statistical properties of real data. Let's look at how it can transform your business.

1. Training a Generative Model with Minimal Data

Traditionally, building a fraud detection model could require five or more years of historical transaction data. With synthetic data, that isn’t always necessary.

For example, PayPal, a leading global payments platform, used synthetic data to train its fraud detection systems. Instead of relying on years of customer transaction data, it used three months of anonymized data to train a GAN.

This allowed PayPal to generate millions of realistic transactions and surface fraud patterns that traditional data gathering methods could not have captured.
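For readers wondering what training a GAN involves: in the standard formulation introduced by Goodfellow et al. (2014), a generator G turns random noise z into synthetic samples, while a discriminator D estimates the probability that a sample came from the real data. The two are trained against each other on the minimax objective below. This is the general textbook objective, not a description of PayPal's specific model.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```

D is rewarded for telling real samples from synthetic ones, and G is rewarded for fooling D. When training succeeds, G's samples become hard to tell apart from real transactions.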

2. Generating Millions of Transactions Quickly

One of the best things about synthetic data is how quickly it can be generated. For example, Visa has been using synthetic data to simulate millions of transactions. With GANs, they can generate detailed, realistic simulations of everything from credit card transactions to merchant purchases.

Why does this matter? Think about a fraud detection model that needs 10 million transaction samples for training. Gathering and labeling that many transactions manually could take months, if not longer.

With synthetic data, companies like Visa can generate 10 million transactions in just hours. And they aren’t random: they mirror the statistical patterns of real-world transactions, saving both time and money.
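To illustrate the basic fit-then-sample idea, here is a deliberately simple stand-in for a GAN, assuming a small hypothetical sample of real transactions. It fits a log-normal model to amounts and empirical frequencies to merchant categories, then draws as many synthetic rows as you ask for. Unlike a GAN, it samples each column independently, so it does not capture relationships between columns, and it is not how Visa or any specific company generates data.

```python
import math
import random
import statistics
from collections import Counter

def fit(transactions):
    """Fit a log-normal to amounts and empirical frequencies to merchant categories."""
    log_amounts = [math.log(t["amount"]) for t in transactions]
    mu = statistics.fmean(log_amounts)
    sigma = statistics.stdev(log_amounts)
    counts = Counter(t["merchant"] for t in transactions)
    merchants = list(counts)
    weights = [counts[m] for m in merchants]
    return mu, sigma, merchants, weights

def generate(model, n, seed=0):
    """Sample n synthetic transactions; each column is sampled independently."""
    mu, sigma, merchants, weights = model
    rng = random.Random(seed)
    chosen = rng.choices(merchants, weights=weights, k=n)
    return [
        {"amount": round(rng.lognormvariate(mu, sigma), 2), "merchant": m}
        for m in chosen
    ]

# Hypothetical "real" sample; in practice this would be anonymized production data.
real = [
    {"amount": 12.50, "merchant": "grocery"},
    {"amount": 48.00, "merchant": "fuel"},
    {"amount": 7.25, "merchant": "coffee"},
    {"amount": 130.00, "merchant": "electronics"},
    {"amount": 22.10, "merchant": "grocery"},
    {"amount": 5.80, "merchant": "coffee"},
]

model = fit(real)
synthetic = generate(model, 100_000)
print(len(synthetic), synthetic[:3])
```

Sampling is cheap once the model is fitted, which is what makes "millions of rows in hours" plausible; a GAN adds the ability to learn joint patterns that this sketch ignores.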

3. Injecting Controlled Fraud Patterns

The real magic happens when synthetic data is used to inject controlled fraud patterns, allowing companies to simulate specific, often rare, fraud scenarios.

Take the example of American Express. When training its fraud detection models, the company wanted to simulate a variety of fraud attempts, from credit card theft to account takeovers and return fraud. Instead of waiting for these events to occur naturally (which could take years), it used synthetic data to inject realistic fraud patterns into its dataset.

By controlling the parameters, American Express could simulate subtle fraud attempts, as well as highly sophisticated scams that are harder to detect in real life.

This approach helped American Express create a fraud detection model that could identify fraudulent activity more quickly and accurately, even for highly complex and rare fraud attempts.
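Here is a minimal sketch of what "injecting controlled fraud patterns" can look like, assuming a list of normal synthetic transactions already exists. The two scenarios and every parameter (`fraud_rate`, `amount_multiplier`, `burst_size`) are hypothetical illustrations, not American Express's method. The point is that each injected record carries a known label and known settings, so you can dial scenarios from subtle to blatant.

```python
import random

def inject_fraud(transactions, fraud_rate=0.01, amount_multiplier=5.0,
                 burst_size=5, seed=0):
    """Return a shuffled, labeled copy of `transactions` with synthetic fraud added.

    Hypothetical scenarios:
      - "account_takeover": one copied transaction with its amount inflated
      - "card_testing": a burst of tiny charges on the same card
    """
    rng = random.Random(seed)
    labeled = [dict(t, is_fraud=0, scenario=None) for t in transactions]
    n_events = max(1, int(len(transactions) * fraud_rate))
    for _ in range(n_events):
        base = rng.choice(transactions)  # reuse a realistic card and context
        if rng.random() < 0.5:
            labeled.append(dict(base, amount=round(base["amount"] * amount_multiplier, 2),
                                is_fraud=1, scenario="account_takeover"))
        else:
            for _ in range(burst_size):
                labeled.append(dict(base, amount=round(rng.uniform(0.5, 2.0), 2),
                                    is_fraud=1, scenario="card_testing"))
    rng.shuffle(labeled)
    return labeled

# Hypothetical "normal" synthetic transactions spread across 50 cards.
gen = random.Random(42)
normal = [{"card": f"card-{i % 50}", "amount": round(gen.uniform(5, 200), 2)}
          for i in range(1000)]

subtle = inject_fraud(normal, fraud_rate=0.02, amount_multiplier=1.5)
blatant = inject_fraud(normal, fraud_rate=0.02, amount_multiplier=20.0)
print(sum(t["is_fraud"] for t in subtle), "fraud rows out of", len(subtle))
```

Training and evaluating on both the `subtle` and `blatant` variants shows how well a detector holds up as the fraud signal weakens.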

4. Validation with Rigorous Statistical Testing

Once the synthetic data is generated, it needs to be validated to ensure that it behaves like real data. This is where statistical tests like the Kolmogorov-Smirnov test come into play.

Here’s how it works: IBM uses synthetic data to simulate customer behavior for training their predictive models. But before using the data, they apply the Kolmogorov-Smirnov test to compare the distributions of the synthetic data with the distributions of real-world customer transaction data.

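This step checks that the distribution of each synthetic feature is statistically close to its real-world counterpart. Because the KS test compares one variable at a time, it is usually paired with checks on the relationships between features, such as correlations.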
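The two-sample KS statistic is the largest vertical gap between the empirical cumulative distribution functions (ECDFs) of two samples. Below is a stdlib-only sketch that computes it for one numeric column; in practice you would likely reach for `scipy.stats.ks_2samp`, which also returns a p-value. The sample values are hypothetical.

```python
from bisect import bisect_right

def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: max |F_a(x) - F_b(x)|."""
    a, b = sorted(a), sorted(b)
    d = 0.0
    # The ECDFs only change at observed values, so checking every pooled
    # data point is enough to find the largest gap.
    for x in a + b:
        fa = bisect_right(a, x) / len(a)  # fraction of `a` that is <= x
        fb = bisect_right(b, x) / len(b)  # fraction of `b` that is <= x
        d = max(d, abs(fa - fb))
    return d

real = [1, 2, 3, 4, 5]
print(ks_statistic(real, [1, 2, 3, 4, 5]))   # identical samples
print(ks_statistic(real, [3, 4, 5, 6, 7]))   # partial overlap
print(ks_statistic(real, [6, 7, 8, 9, 10]))  # no overlap
```

A statistic near 0 means the synthetic column tracks the real one closely; a value near 1 means the two barely overlap.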

Are you starting to see the possibilities? But wait, there's more...

Breaking Through – Real Implementation Strategies

Let's talk about how you (yes, you) can implement synthetic data in your organization, using frameworks that have delivered results across industries.

1. Data Profiling Phase

Can you truly trust data you don’t fully understand? In the Data Profiling Phase, privacy must be your first priority. With regulations like GDPR and CCPA in force, think of it as building a security perimeter around your data before diving in.

Once privacy is secured, run a statistical distribution analysis to spot subtle outliers, whether in healthcare or finance data, that could skew your insights. Then perform correlation structure mapping to identify critical relationships, like the one between stock prices and economic indicators, since your synthetic data will need to preserve them.

2. Generator Architecture Selection

Now that you have a clear understanding of your data, it's time to make some architecture decisions. Choosing the right generator architecture isn’t about jumping on the latest trend; it’s about aligning the model with your data modality, privacy requirements, and scaling needs.

For instance, in a retail environment with real-time customer data streams, a deep learning model can be ideal for personalized recommendations.

However, in a highly regulated industry like pharmaceuticals, data anonymization and compliance with privacy laws are critical, requiring a more tailored architecture. We at Techolution help you select the right model based on your unique data and business needs, ensuring optimal performance and compliance.

3. Validation Pipeline Development

The real work begins with validation: ensuring your model works in the real world, not just on paper. Start by defining statistical similarity metrics to compare model outputs against real-world expectations. In manufacturing, for example, if you're predicting equipment failures, you must validate those predictions against historical data. Without this step, you're simply guessing.
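As a sketch of validating against history: replay the model over a past period where you already know which machines failed, then compare. The machine IDs and both sets below are hypothetical.

```python
def backtest(predicted_failures, actual_failures):
    """Compare predicted vs. historically observed failures (sets of machine IDs)."""
    tp = len(predicted_failures & actual_failures)  # failures the model got right
    precision = tp / len(predicted_failures) if predicted_failures else 0.0
    recall = tp / len(actual_failures) if actual_failures else 0.0
    return precision, recall

predicted = {"press-03", "press-07", "lathe-12", "lathe-15"}
actual = {"press-03", "lathe-12", "lathe-15", "mill-02", "mill-09"}

precision, recall = backtest(predicted, actual)
print(f"precision={precision:.2f} recall={recall:.2f}")
# prints: precision=0.75 recall=0.60
```

Precision tells you how many alarms were real; recall tells you how many real failures the model would have caught.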

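But it doesn’t stop there. You also need domain-specific quality checks. In fraud detection, for instance, general validation won’t suffice: you also need to confirm that records obey the rules of the domain, such as plausible transaction amounts, valid merchant categories, and timestamps in the right order.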
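Domain-specific checks are often simple, explicit rules. Here is a minimal sketch, assuming synthetic transactions are dicts with hypothetical `amount`, `category`, `authorized_at`, and `settled_at` fields; the allow-list and amount limit are placeholders that domain experts would supply.

```python
from datetime import datetime

# Hypothetical allow-list and limit; real values would come from domain experts.
VALID_CATEGORIES = {"grocery", "fuel", "restaurant", "electronics"}
MAX_AMOUNT = 50_000

def check_transaction(t):
    """Return the list of domain rules a synthetic transaction violates (empty = passes)."""
    problems = []
    if not (0 < t["amount"] <= MAX_AMOUNT):
        problems.append("amount out of plausible range")
    if t["category"] not in VALID_CATEGORIES:
        problems.append("unknown merchant category")
    if t["settled_at"] < t["authorized_at"]:
        problems.append("settled before authorized")
    return problems

good = {"amount": 42.0, "category": "grocery",
        "authorized_at": datetime(2024, 1, 5, 10, 0),
        "settled_at": datetime(2024, 1, 6, 9, 0)}
bad = {"amount": -3.0, "category": "unknown",
       "authorized_at": datetime(2024, 1, 5, 10, 0),
       "settled_at": datetime(2024, 1, 4, 9, 0)}

print(check_transaction(good))
print(check_transaction(bad))
```

A statistically convincing dataset can still contain records like `bad`; rules like these catch what distribution tests miss.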

Finally, incorporate adversarial testing: test your model against potential "bad actors." In cybersecurity, this helps ensure your system can withstand data manipulation attempts, so you’re prepared for the worst while hoping for the best.
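One simple form of adversarial testing is a perturbation test: nudge an input the way an attacker might and see whether the decision flips. The detector below is a hypothetical toy rule set, not a real fraud model; it exists only to show how a brittle threshold gets exposed.

```python
def fraud_score(t):
    """Hypothetical toy detector: large charges at merchants new to the card score high."""
    score = 0.0
    if t["amount"] > 1000:
        score += 0.6
    if t["new_merchant"]:
        score += 0.3
    return score

def is_flagged(t, threshold=0.8):
    return fraud_score(t) >= threshold

def perturbation_test(t, deltas=(-0.05, -0.02, 0.02, 0.05)):
    """Nudge the amount by small percentages; return the nudges that flip the decision."""
    base = is_flagged(t)
    flips = []
    for d in deltas:
        nudged = dict(t, amount=t["amount"] * (1 + d))
        if is_flagged(nudged) != base:
            flips.append(d)
    return flips

suspicious = {"amount": 1020.0, "new_merchant": True}
print(perturbation_test(suspicious))
# prints: [-0.05, -0.02]
```

Here a 2% reduction slips the charge under the 1,000 threshold and past the detector, exactly the kind of weakness adversarial testing is meant to surface before a real attacker does.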

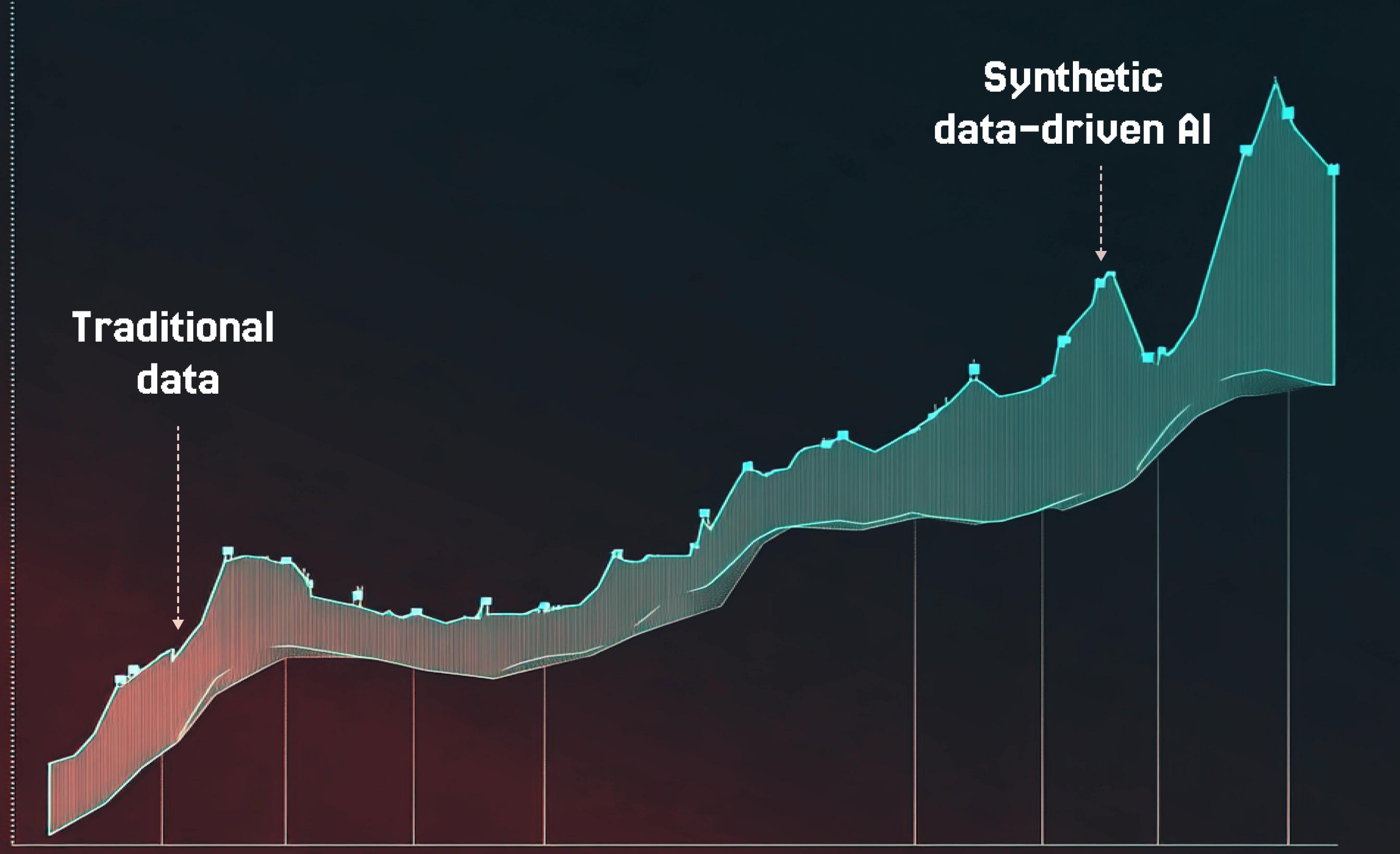

The Future Is Synthetic (And It's Already Here)

Innovation doesn’t wait, and neither should you. The companies adopting synthetic data are setting the pace, and the future of data is not just about keeping up but about leading the charge. Reported gains across industries include:

[Image: Progression from traditional data to synthetic data-driven AI]

- Healthcare: 2.5x faster model development cycles

- Financial Services: 4x reduction in data collection costs

- Manufacturing: 60% improvement in rare defect detection

Early adopters are already building competitive moats using synthetic data. While others struggle with data collection and privacy compliance, these organizations are iterating faster, training better models, and deploying solutions years ahead of competitors.

Can you afford to wait?

The Next Step in AI-Driven Success

The synthetic data revolution is happening now, and it's time to take action. Start by auditing your current data bottlenecks and identifying high-impact use cases for synthetic data.

Don’t let your competitors gain an edge. Put synthetic data to work today to drive innovation and success. The future of AI starts with smarter data, not just smarter algorithms.

The question isn't whether to adopt synthetic data, but when. And the answer, increasingly, is NOW.

At Techolution, we help you overcome data limitations with AI solutions tailored to your organization’s needs. Let’s do AI right!